How To Solve For An Eigenvector

Introduction

Eigenvectors and eigenvalues have many important applications in computer vision and machine learning in general. Well known examples are PCA (Principal Component Analysis) for dimensionality reduction or EigenFaces for face recognition. An interesting use of eigenvectors and eigenvalues is also illustrated in my post about error ellipses. Furthermore, eigendecomposition forms the base of the geometric interpretation of covariance matrices, discussed in an more recent post. In this article, I will provide a gentle introduction into this mathematical concept, and will show how to manually obtain the eigendecomposition of a 2D square matrix.

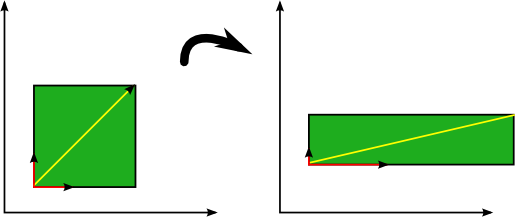

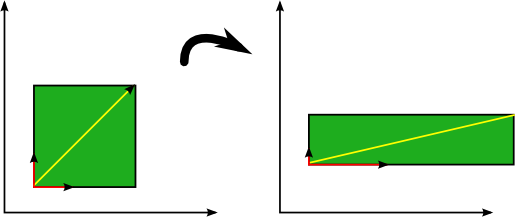

An eigenvector is a vector whose direction remains unchanged when a linear transformation is applied to it. Consider the image below in which three vectors are shown. The green square is only drawn to illustrate the linear transformation that is applied to each of these three vectors.

Eigenvectors (red) do not change direction when a linear transformation (e.g. scaling) is applied to them. Other vectors (yellow) do.

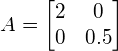

The transformation in this case is a simple scaling with factor 2 in the horizontal direction and factor 0.5 in the vertical direction, such that the transformation matrix ![]() is defined as:

is defined as:

.

.

A vector ![]() is then scaled by applying this transformation as

is then scaled by applying this transformation as ![]() . The above figure shows that the direction of some vectors (shown in red) is not affected by this linear transformation. These vectors are called eigenvectors of the transformation, and uniquely define the square matrix

. The above figure shows that the direction of some vectors (shown in red) is not affected by this linear transformation. These vectors are called eigenvectors of the transformation, and uniquely define the square matrix ![]() . This unique, deterministic relation is exactly the reason that those vectors are called 'eigenvectors' (Eigen means 'specific' in German).

. This unique, deterministic relation is exactly the reason that those vectors are called 'eigenvectors' (Eigen means 'specific' in German).

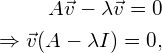

In general, the eigenvector ![]() of a matrix

of a matrix ![]() is the vector for which the following holds:

is the vector for which the following holds:

(1) ![]()

where ![]() is a scalar value called the 'eigenvalue'. This means that the linear transformation

is a scalar value called the 'eigenvalue'. This means that the linear transformation ![]() on vector

on vector ![]() is completely defined by

is completely defined by ![]() .

.

We can rewrite equation (1) as follows:

(2)

where ![]() is the identity matrix of the same dimensions as

is the identity matrix of the same dimensions as ![]() .

.

However, assuming that ![]() is not the null-vector, equation (2) can only be defined if

is not the null-vector, equation (2) can only be defined if ![]() is not invertible. If a square matrix is not invertible, that means that its determinant must equal zero. Therefore, to find the eigenvectors of

is not invertible. If a square matrix is not invertible, that means that its determinant must equal zero. Therefore, to find the eigenvectors of ![]() , we simply have to solve the following equation:

, we simply have to solve the following equation:

(3) ![]()

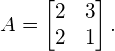

In the following sections we will determine the eigenvectors and eigenvalues of a matrix ![]() , by solving equation (3). Matrix

, by solving equation (3). Matrix ![]() in this example, is defined by:

in this example, is defined by:

(4)

Calculating the eigenvalues

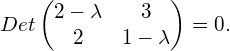

To determine the eigenvalues for this example, we substitute ![]() in equation (3) by equation (4) and obtain:

in equation (3) by equation (4) and obtain:

(5)

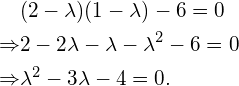

Calculating the determinant gives:

(6)

To solve this quadratic equation in ![]() , we find the discriminant:

, we find the discriminant:

![]()

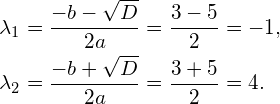

Since the discriminant is strictly positive, this means that two different values for ![]() exist:

exist:

(7)

We have now determined the two eigenvalues ![]() and

and ![]() . Note that a square matrix of size

. Note that a square matrix of size ![]() always has exactly

always has exactly ![]() eigenvalues, each with a corresponding eigenvector. The eigenvalue specifies the size of the eigenvector.

eigenvalues, each with a corresponding eigenvector. The eigenvalue specifies the size of the eigenvector.

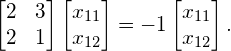

Calculating the first eigenvector

We can now determine the eigenvectors by plugging the eigenvalues from equation (7) into equation (1) that originally defined the problem. The eigenvectors are then found by solving this system of equations.

We first do this for eigenvalue ![]() , in order to find the corresponding first eigenvector:

, in order to find the corresponding first eigenvector:

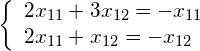

Since this is simply the matrix notation for a system of equations, we can write it in its equivalent form:

(8)

and solve the first equation as a function of ![]() , resulting in:

, resulting in:

(9) ![]()

Since an eigenvector simply represents an orientation (the corresponding eigenvalue represents the magnitude), all scalar multiples of the eigenvector are vectors that are parallel to this eigenvector, and are therefore equivalent (If we would normalize the vectors, they would all be equal). Thus, instead of further solving the above system of equations, we can freely chose a real value for either ![]() or

or ![]() , and determine the other one by using equation (9).

, and determine the other one by using equation (9).

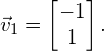

For this example, we arbitrarily choose ![]() , such that

, such that ![]() . Therefore, the eigenvector that corresponds to eigenvalue

. Therefore, the eigenvector that corresponds to eigenvalue ![]() is

is

(10)

Calculating the second eigenvector

Calculations for the second eigenvector are similar to those needed for the first eigenvector;

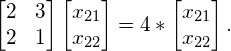

We now substitute eigenvalue ![]() into equation (1), yielding:

into equation (1), yielding:

(11)

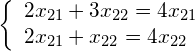

Written as a system of equations, this is equivalent to:

(12)

Solving the first equation as a function of ![]() resuls in:

resuls in:

(13) ![]()

We then arbitrarily choose ![]() , and find

, and find ![]() . Therefore, the eigenvector that corresponds to eigenvalue

. Therefore, the eigenvector that corresponds to eigenvalue ![]() is

is

(14)

Conclusion

In this article we reviewed the theoretical concepts of eigenvectors and eigenvalues. These concepts are of great importance in many techniques used in computer vision and machine learning, such as dimensionality reduction by means of PCA, or face recognition by means of EigenFaces.

If you're new to this blog, don't forget to subscribe, or follow me on twitter!

Summary

Article Name

What are eigenvectors and eigenvalues?

Author

Vincent Spruyt

Description

This article explains what eigenvectors and eigenvalues are in an intuitive manner. Furthermore, we manually perform the eigendecomposition of a simple 2x2 matrix as an example.

How To Solve For An Eigenvector

Source: https://www.visiondummy.com/2014/03/eigenvalues-eigenvectors/

Posted by: alfordbrebrugh.blogspot.com

0 Response to "How To Solve For An Eigenvector"

Post a Comment